Unicode

What is Unicode?

Unicode is a universal character encoding standard that is maintained by the Unicode Consortium, a standards organization founded in 1991 for the internationalization of software and services. Officially called the Unicode Standard, it provides the basis for "processing, storage and interchange of text data in any language in all modern software and information technology protocols." Unicode now supports all the world's writing systems, both modern and ancient, according to the consortium.

The most recent edition of the Unicode Standard is version 15.0.0, which includes encodings for 149,186 characters. The standard bases its encodings on language scripts -- the characters used for written language -- rather than on the languages themselves. In this way, multiple languages can use the same set of encodings, resulting in the need for fewer encodings. For example, the standard's Latin script encodings support English, German, Danish, Romanian, Bosnian, Portuguese, Albanian and hundreds of other languages.

Along with the standard's character encoding, the Unicode Consortium provides descriptions and other data about how the characters function, along with details about topics such as the following:

- Forming words and breaking lines.

- Sorting text in different languages.

- Formatting numbers, dates and times.

- Displaying languages written from right to left.

- Dealing with security concerns related to "look-alike" characters.

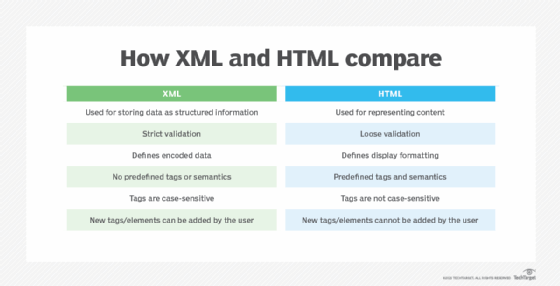

The Unicode Standard defines a consistent approach to encoding multilingual text that enables interoperability between disparate systems. In this way, Unicode enables the international exchange of information in a consistent and efficient manner, while providing a foundation for global software and services. Both HTML and XML have adopted Unicode as their default system of encoding, as have most modern operating systems, programming languages and internet protocols.

The rise of Unicode

Before Unicode was universally adopted, different computing environments relied on their own systems to encode text characters. Many of them started with the American Standard Code for Information Interchange (ASCII), either conforming to this standard or enhancing it to support additional characters. However, ASCII was generally limited to English or languages using a similar alphabet, leading to the development of other encoding systems, none of which could address the needs of all the world's languages.

Before long, there were hundreds of individual encoding standards in use, many of which conflicted with each other at the character level. For example, the same character might be encoded in several different ways. If a document was encoded according to one system, it might be difficult or impossible to read that document in an environment based on another system. These types of issues became increasingly pronounced as the internet continued to gain in popularity and more data was being exchanged across the globe.

The Unicode Standard started with the ASCII character set and steadily expanded to incorporate more characters and subsequently more languages. The standard assigns a name and a numeric value to each character. The numeric value is referred to as the character's code point and is expressed in a hexadecimal form that follows the U+ prefix. For example, the code point for the Latin capital letter A is U+0041.

Unicode treats alphabetic characters, ideographic characters and other types of symbols in the same way, helping to simplify the overall approach to character encoding. At the same time, it takes into account the character's case, directionality and alphabetic properties, which define its identity and behavior.

The Unicode Standard represents characters in one of three encoding forms:

- 8-bit form (UTF-8). A variable-length form in which each character is between 1 and 4 bytes. The first byte indicates the number of bytes used for that character. The first byte of a 1 byte character starts with 0. A 2 byte character starts with 110, a 3 byte character starts with 1110, and a 4 byte character starts with 11110. UTF-8 preserves ASCII in its original form, providing a 1:1 mapping that eases migrations. It is also the most commonly used form across the web.

- 16-bit form (UTF-16). A variable-length form in which each character is 2 or 4 bytes. The original Unicode Standard was also based on a 16-bit format. In UTF-16, characters that require 4 bytes, such as emoji and emoticons, are considered supplementary characters. Supplementary characters use two 16-bit units, referred to as surrogate pairs, totaling 4 bytes per character. The 16-bit form can add implementation overhead because of the variability between 16-bit and 32-bit characters.

- 32-bit form (UTF-32). A fixed-length form in which each character is 4 bytes. This form can reduce implementation overhead because it simplifies the programming model. On the other hand, it forces the use of 4 bytes for all characters, no matter how few bytes are needed, increasing the impact on storage resources.

The 16-bit and 32-bit forms both support little-endian byte serialization, in which the least significant byte is stored first, and big-endian byte serialization, in which the most significant byte is first. In addition, all three forms support the full range of Unicode code points, U+0000 through U+10FFFF, which totals 1,114,112 possible code points. However, the majority of common characters in the world's chief languages are encoded in the first 65,536 code points, which are known as the Basic Multilingual Plane, or BMP.

Read this primer for developers on how binary and hexadecimal number systems work.