cognitive computing

What is cognitive computing?

Cognitive computing is the use of computerized models to simulate the human thought process in complex situations where the answers might be ambiguous and uncertain. The phrase is closely associated with IBM's cognitive computer system, Watson.

Computers are faster than humans at processing and calculating, but they've yet to master some tasks, such as understanding natural language and recognizing objects in an image. Cognitive computing is an attempt to have computers mimic the way the human brain works.

To accomplish this, cognitive computing uses artificial intelligence (AI) and other underlying technologies, including the following:

- Expert systems.

- Neural networks.

- Machine learning.

- Deep learning.

- Natural language processing (NLP).

- Speech recognition.

- Object recognition.

- Robotics.

Cognitive computing uses these processes in conjunction with self-learning algorithms, data analysis and pattern recognition to teach computing systems. The learning technology can be used for sentiment analysis, risk assessments and face detection. In addition, cognitive computing is particularly useful in fields such as healthcare, banking, finance and retail.

How cognitive computing works

Cognitive computing systems combine data from various sources, weighing context and conflicting evidence to suggest the best possible answers. To do this, they rely on self-learning technologies that use data mining, pattern recognition and NLP to mimic human intelligence.
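As a rough illustration of weighing conflicting evidence, the following Python sketch scores candidate answers by summing source reliability, counted positively when a source supports an answer and negatively when it contradicts it. The function name `rank_answers`, the reliability values and the answer labels are all hypothetical; real systems use far richer models of context and confidence.

```python
from collections import defaultdict


def rank_answers(evidence):
    """Rank candidate answers from (answer, reliability, support) items.

    reliability is an assumed 0..1 trust score for the source.
    support is +1 if the source backs the answer, -1 if it contradicts it.
    """
    scores = defaultdict(float)
    for answer, reliability, support in evidence:
        scores[answer] += reliability * support
    # Highest combined score first.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical evidence gathered from three different sources.
evidence = [
    ("answer_a", 0.9, +1),  # a reliable source supports answer_a
    ("answer_a", 0.4, -1),  # a weaker source contradicts answer_a
    ("answer_b", 0.6, +1),  # a moderately reliable source supports answer_b
]

ranked = rank_answers(evidence)
best_answer = ranked[0][0]
```

The output is a ranked list rather than a single verdict, which reflects the idea of suggesting the best possible answers instead of asserting one.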

Using computer systems to solve the types of problems that humans are typically tasked with requires vast amounts of structured and unstructured data fed to machine learning algorithms. Over time, cognitive systems can refine the way they identify patterns and process data. They become capable of anticipating new problems and modeling possible solutions.

For example, an AI system trained on thousands of labeled pictures of dogs can learn to identify dogs in new pictures. In general, the more data a system is exposed to, the more it can learn and the more accurate it tends to become over time.
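The idea of learning from labeled examples can be sketched with a very small nearest-centroid classifier. This is a toy stand-in, not how production image recognition works: each picture is assumed to be already reduced to two made-up numeric features, and the names `train` and `predict` are illustrative.

```python
from statistics import mean


def train(examples):
    """Compute one centroid (average feature vector) per label."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest to the given features."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))

    return min(centroids, key=lambda label: squared_distance(centroids[label]))


# Hypothetical labeled examples: (feature vector, label).
examples = [
    ((0.9, 0.8), "dog"),
    ((0.8, 0.9), "dog"),
    ((0.2, 0.1), "not_dog"),
    ((0.1, 0.3), "not_dog"),
]

model = train(examples)
label = predict(model, (0.85, 0.7))
```

Adding more labeled examples shifts each centroid toward a better estimate of its class, which is the simplest version of a system improving as it sees more data.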

To achieve those capabilities, cognitive computing systems must have the following attributes:

- Adaptive. Systems must be flexible enough to learn as information changes and goals evolve. They must digest dynamic data in real time and adjust as the data and environments change.

- Interactive. Human-computer interaction is a critical component in cognitive systems. Users must be able to interact with cognitive machines and define their needs as they change. The technologies must also be able to interact with other processors, devices and cloud platforms.

- Iterative and stateful. Cognitive computing technologies can ask questions and pull in additional data to identify or clarify a problem. They must be stateful in that they keep information about similar situations that have occurred previously.

- Contextual. Understanding context is critical in thought processes. Cognitive systems must understand, identify and mine contextual data, such as syntax, time, location, domain, user requirements, user profiles, tasks and goals. The systems can draw on multiple sources of information, including structured and unstructured data and visual, auditory and sensor data.
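The four attributes above can be illustrated with a small Python sketch. The class `CognitiveSession`, its methods and the required context fields are hypothetical and chosen only for illustration; the sketch shows the shape of a stateful, iterative interaction, not a real cognitive system.

```python
class CognitiveSession:
    """Toy session that keeps state and asks for missing context."""

    # Hypothetical context fields the session needs before it can answer.
    REQUIRED_CONTEXT = ("location", "goal")

    def __init__(self):
        self.context = {}  # contextual: what is known about the user
        self.history = []  # stateful: requests seen so far

    def update(self, **facts):
        """Adaptive: merge new or changed facts into the context."""
        self.context.update(facts)

    def ask(self, request):
        """Interactive and iterative: answer, or ask a clarifying question."""
        self.history.append(request)
        missing = [key for key in self.REQUIRED_CONTEXT if key not in self.context]
        if missing:
            return f"Could you tell me your {missing[0]}?"
        return (
            f"Handling '{request}' for goal '{self.context['goal']}' "
            f"in {self.context['location']}"
        )


session = CognitiveSession()
first_reply = session.ask("find a savings plan")    # asks for missing context
session.update(location="Berlin", goal="retirement")
second_reply = session.ask("find a savings plan")   # now has enough context
```

The first call returns a clarifying question because context is missing; the second call uses the stored context and the session history records both requests.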

Examples and applications of cognitive computing

Cognitive computing systems are typically used to accomplish tasks that require parsing large amounts of data. For example, in computer science, cognitive computing aids in big data analytics, identifying trends and patterns, understanding human language and interacting with customers.

The following examples show how cognitive computing is used in various industries:

- Healthcare. Cognitive computing can manage and analyze large amounts of unstructured healthcare data such as patient histories, diagnoses, conditions and journal research articles to make recommendations to medical professionals. The goal is to help doctors make better treatment decisions, as cognitive technology expands their capabilities and assists with decision-making.

- Retail. In retail environments, cognitive technologies analyze basic information about the customer, along with details about the product the customer is considering, to provide personalized suggestions.

- Banking and finance. Cognitive computing in the banking and finance industry analyzes unstructured data from different sources to gain more knowledge about customers. NLP is used to create chatbots that communicate with customers to help improve operational efficiency and customer engagement.

- Logistics. Cognitive computing aids in areas such as warehouse management and automation, networking, and the use of internet of things and other edge computing devices.

- Human cognitive augmentation. This interdisciplinary field integrates cognitive computing, psychology, neuroscience, engineering and other disciplines to create apps and tools for cognitive enhancement. Cognitive technologies are used to surpass the average level of mental capabilities. Examples include memory recall assistance and cognitive-enhancing brain implants, which can be particularly beneficial for individuals with conditions such as attention-deficit/hyperactivity disorder or amnesia.

- Customer service. By employing intelligent chatbots and virtual assistants, cognitive computing can augment the customer service experience. These advanced systems can decipher natural language inquiries, enabling tailored interactions and faster resolution of customer queries.
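To make the chatbot examples more concrete, here is a minimal Python sketch of routing a customer query to an intent. It uses plain keyword overlap as a stand-in for real NLP, and the intents, keywords and the `route` function are assumptions made for illustration only.

```python
import re

# Hypothetical intents and the keywords that signal them.
INTENTS = {
    "billing": {"invoice", "charge", "refund", "payment"},
    "shipping": {"delivery", "package", "tracking", "shipped"},
}


def route(query):
    """Pick the intent with the most keyword matches, else hand off to a person."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    scores = {intent: len(words & keywords) for intent, keywords in INTENTS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "human_agent"


shipping_intent = route("Where is my package? The tracking page is empty.")
billing_intent = route("I want a refund for this charge.")
fallback = route("Hello there")
```

Falling back to a human agent when nothing matches mirrors the point that these systems are meant to assist people rather than replace them.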

Advantages of cognitive computing

Advantages of cognitive computing include positive outcomes for the following:

- Analytical accuracy. Cognitive computing is proficient at juxtaposing and cross-referencing structured and unstructured data from a variety of sources, such as images, videos and text.

- Business process efficiency. Cognitive technology can recognize patterns when analyzing large data sets.

- Customer interaction and experience. The contextual and relevant information that cognitive computing provides to customers through tools like chatbots improves customer interactions. A combination of cognitive assistants, personalized recommendations and behavioral predictions enhances the customer experience.

- Employee productivity and service quality. Cognitive systems help employees analyze structured and unstructured data to identify data patterns and trends.

- Troubleshooting and error detection. With the ability to conduct pattern analysis and tracking, cognitive computing models are highly effective at detecting errors in software code and encryption algorithms for security systems. In sophisticated technical frameworks, cognitive computing promotes faster and more precise problem-solving by enabling error detection in business processes.
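Error detection through pattern analysis can be sketched with a simple statistical check. The Python example below flags values that sit far from the mean of a series, using a z-score threshold; the function name `find_anomalies`, the threshold of 2.0 and the sample durations are assumptions, and real systems typically use learned models rather than a fixed rule.

```python
from statistics import mean, stdev


def find_anomalies(values, threshold=2.0):
    """Return indexes of values more than `threshold` standard deviations from the mean."""
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [
        index
        for index, value in enumerate(values)
        if abs(value - mu) / sigma > threshold
    ]


# Hypothetical processing times (in seconds) for a business process step.
durations = [10, 11, 9, 10, 12, 10, 11, 9, 10, 48]
anomalies = find_anomalies(durations)
```

Here the last measurement is flagged because it deviates sharply from the established pattern, while normal variation among the other values is ignored.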

Disadvantages of cognitive systems

Cognitive technology also has downsides, including the following:

- Security challenges. Cognitive systems need large amounts of data to learn from, which can make them more vulnerable to cybersecurity breaches. Organizations using the systems must properly protect the data, especially if it's health, customer or any type of personally identifiable information.

- Long development cycles. These systems require skilled development teams and a considerable amount of time to develop software that makes them useful. The systems themselves need extensive and detailed training with large data sets to understand given tasks and processes. The complexity and level of expertise required might keep companies with smaller development teams from integrating cognitive computing into their applications.

- Slow adoption. The development lifecycle is one reason for slow adoption rates. Smaller organizations might anticipate the difficulty of implementing cognitive systems and therefore avoid them.

- Negative environmental impact. The process of training cognitive systems and neural networks consumes a lot of power, resulting in a sizable carbon footprint.

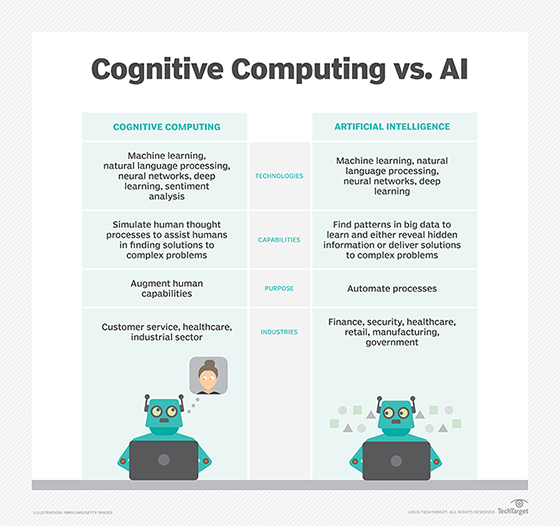

How cognitive computing differs from AI

The term cognitive computing is often used interchangeably with AI. But there are differences in the purposes and use cases of the two technologies.

Cognitive computing. The term cognitive computing is typically used to describe AI systems that simulate human thought to augment human cognition. Human cognition involves real-time analysis of the real-world environment, context, intent and many other variables that inform a person's ability to solve problems.

AI. AI is the umbrella term for technologies that rely on data to make decisions. These technologies include -- but aren't limited to -- machine learning, neural networks, NLP and deep learning systems. With AI, data is fed into an algorithm over a long period of time so that the system learns variables and can predict outcomes. Applications based on AI include intelligent assistants, such as Amazon Alexa and Apple Siri, and driverless cars.

A number of AI technologies are required for a computer system to build cognitive models. These include machine learning, deep learning, neural networks, NLP and sentiment analysis.

In general, cognitive computing is used to assist humans in decision-making processes. AI relies on algorithms to solve a problem or identify patterns in big data sets. Cognitive computing systems have the loftier goal of creating algorithms that mimic the human brain's reasoning process to solve problems as the data and the problems change.

While artificial intelligence can mimic human intelligence to a certain extent, there are notable discrepancies between AI and human cognition.