data labeling

What is data labeling?

Data labeling is the process of identifying and tagging data samples, most commonly to train machine learning (ML) models. The process can be manual but is usually performed or assisted by software. Accurate labels help machine learning models make accurate predictions, and labeling is also used in fields such as computer vision, natural language processing (NLP) and speech recognition.

The process starts with raw data, such as images or text files. After the data is collected, one or more identifying labels are applied to each segment to specify its context for the ML model.

What is data labeling used for?

Data labeling is an important part of data preprocessing for ML, particularly for supervised learning. In supervised learning, a machine learning program is trained on labeled input data. Models are trained until they can detect the underlying relationship between the input data and the output labels. In this setting, data labeling helps the model process and understand the input data.

A model being trained to identify animals in images, for example, might be provided with multiple labeled images of various types of animals. From these it would learn the common features of each animal, enabling it to correctly identify the animals in unlabeled images.
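As a rough illustration of learning from labeled inputs, the sketch below trains a tiny nearest-centroid classifier. The feature values (weight and height), the labels and the function names are all invented for this example; real image models learn far richer features.

```python
from collections import defaultdict

# Hypothetical labeled training data: (features, label) pairs.
# The features are made up for illustration: (weight in kg, height in cm).
labeled_data = [
    ((4.0, 25.0), "cat"),
    ((5.0, 23.0), "cat"),
    ((30.0, 60.0), "dog"),
    ((28.0, 55.0), "dog"),
]

def train(examples):
    """Learn one centroid (mean feature vector) per label."""
    sums = {}
    counts = defaultdict(int)
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {
        label: tuple(total / counts[label] for total in totals)
        for label, totals in sums.items()
    }

def predict(centroids, features):
    """Return the label whose centroid is closest to the unlabeled input."""
    def squared_distance(label):
        return sum((a - b) ** 2 for a, b in zip(centroids[label], features))
    return min(centroids, key=squared_distance)

centroids = train(labeled_data)
print(predict(centroids, (4.5, 24.0)))   # an unlabeled, cat-sized sample
print(predict(centroids, (29.0, 58.0)))  # an unlabeled, dog-sized sample
```

The model never sees a rule such as "cats are small"; it infers the relationship between inputs and labels from the tagged examples alone.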

Data labeling is also used when constructing ML algorithms for autonomous vehicles. Autonomous vehicles, such as self-driving cars, need to be able to tell the difference between objects in their way so that they can process the external world and drive safely. Data labeling is used to enable the car's artificial intelligence (AI) to tell the differences among a person, the street, another car and the sky by labeling the key features of those objects or data points and looking for similarities between them.

Computer vision, which deals with how computers interpret digital images and videos, also uses data labeling to help identify raw data.

NLP, which enables a program to understand human language, uses data labeling to extract and organize data from text. Specific text elements are identified and labeled in an NLP model for understanding.

How does data labeling work?

ML and deep learning systems often require massive amounts of data to establish a foundation for reliable learning patterns. The data they use to inform learning must be labeled or annotated based on data features that help the model organize the data into patterns that produce a desired answer.

The data labeling process starts with data collection, then moves on to data tagging and quality assurance, and ends when the model starts training:

- In the data collection step, raw data that is useful to train a model is collected, cleaned and processed.

- In either a manual or software-aided effort, the data is then labeled with one or more tags, which give the machine learning model context about the data.

- In the quality assurance step, the tags are checked for accuracy, because the quality of the machine learning model depends on the quality and precision of the tags. Properly labeled data becomes the "ground truth."

- The machine learning model is then trained using the labeled data.
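The four steps above can be sketched as a small pipeline. This is only an illustration under stated assumptions: the records, the `annotator_tags` lookup (a stand-in for tags supplied by a person or a labeling tool), the allowed label set and the word-count "model" are all hypothetical.

```python
from collections import Counter, defaultdict

ALLOWED_LABELS = {"positive", "negative"}  # hypothetical agreed label set

raw_records = ["  Great product!  ", "", "Terrible service", "great value", "Terrible service"]

# Stand-in for tags from a human or a tool; note the typo in the last tag.
annotator_tags = {
    "Great product!": "positive",
    "Terrible service": "negative",
    "great value": "postive",
}

def collect(records):
    """Collection step: strip whitespace, drop empty and duplicate records."""
    seen, cleaned = set(), []
    for record in records:
        text = record.strip()
        if text and text not in seen:
            seen.add(text)
            cleaned.append(text)
    return cleaned

def tag(texts, tags):
    """Labeling step: attach a tag to each sample (None if untagged)."""
    return [(text, tags.get(text)) for text in texts]

def quality_check(labeled, allowed):
    """QA step: separate samples with valid tags from those to send back."""
    passed = [(text, label) for text, label in labeled if label in allowed]
    rejected = [(text, label) for text, label in labeled if label not in allowed]
    return passed, rejected

def train(ground_truth):
    """Training stand-in: count how often each word appears under each label."""
    model = defaultdict(Counter)
    for text, label in ground_truth:
        model[label].update(text.lower().split())
    return model

cleaned = collect(raw_records)
labeled = tag(cleaned, annotator_tags)
ground_truth, rejected = quality_check(labeled, ALLOWED_LABELS)
model = train(ground_truth)
print(rejected)  # the mistyped tag is caught before training
```

Only samples that pass the quality check reach training, so a mistyped tag is sent back for correction instead of becoming part of the ground truth.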

The labels used to identify data features must be informative, specific and independent to produce a quality algorithm. A properly labeled data set provides a ground truth that the ML model uses to check its predictions for accuracy and to continue refining its algorithm.

A quality algorithm is high in accuracy, meaning that the labels it predicts are close to the ground truth in the data set.
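One common way to quantify this is the share of predictions that match the ground truth labels. The sketch below assumes simple exact-match accuracy over made-up labels; other metrics might suit other tasks better.

```python
def accuracy(predicted, ground_truth):
    """Fraction of predicted labels that exactly match the ground truth."""
    if len(predicted) != len(ground_truth):
        raise ValueError("predicted and ground_truth must have the same length")
    if not ground_truth:
        raise ValueError("cannot compute accuracy on an empty data set")
    correct = sum(p == t for p, t in zip(predicted, ground_truth))
    return correct / len(ground_truth)

# Hypothetical labels: the model gets the third sample wrong.
ground_truth = ["cat", "dog", "dog", "bird", "cat"]
predicted = ["cat", "dog", "cat", "bird", "cat"]

print(accuracy(predicted, ground_truth))  # 4 of 5 correct -> 0.8
```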

Errors in data labeling impair the quality of the training data set and the performance of any predictive models it's used for. To mitigate this, many organizations take a human-in-the-loop approach, maintaining human involvement in training and testing data models throughout their iterative growth.
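One possible form of a human-in-the-loop workflow is to accept model-suggested labels only when the model is confident and to send the rest to a person. The sketch below assumes each prediction carries a confidence score; the threshold value and the record fields are hypothetical.

```python
REVIEW_THRESHOLD = 0.8  # assumed cutoff; a real project would tune this

# Hypothetical model output for four image regions.
predictions = [
    {"id": 1, "label": "person", "confidence": 0.97},
    {"id": 2, "label": "car", "confidence": 0.55},
    {"id": 3, "label": "sky", "confidence": 0.91},
    {"id": 4, "label": "street", "confidence": 0.62},
]

def route(items, threshold):
    """Split predictions into auto-accepted labels and a human review queue."""
    accepted, needs_review = [], []
    for item in items:
        if item["confidence"] >= threshold:
            accepted.append(item)
        else:
            needs_review.append(item)
    return accepted, needs_review

accepted, needs_review = route(predictions, REVIEW_THRESHOLD)
print([item["id"] for item in accepted])      # labels kept as-is
print([item["id"] for item in needs_review])  # labels a person should check
```

Labels corrected by reviewers can then be fed back into the training set for the next iteration.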

What are common types of data labeling?

The type of data labeling is defined by the medium of the data being labeled. Common types include the following:

- Image and video labeling. This type of labeling supports computer vision: tags are added to individual images or video frames. It is used in areas such as healthcare diagnostics, object recognition and automated cars.

- Text labeling. This type of labeling supports NLP: tags are added to words so that human language can be interpreted. It is used in chatbots and sentiment analysis.

- Audio labeling. This type of labeling supports speech recognition: audio is broken down into segments, which are then labeled. It is useful for voice assistants and speech-to-text transcription.
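To make the difference between these media concrete, the sketch below shows one way a label record might look for each type. The class names, fields and tag values are assumptions made for illustration and do not follow any particular annotation tool's format.

```python
from dataclasses import dataclass

@dataclass
class BoundingBox:
    """Image or video-frame label: a tagged rectangle in pixel coordinates."""
    tag: str
    x: int
    y: int
    width: int
    height: int

    def area(self) -> int:
        return self.width * self.height

@dataclass
class TextSpan:
    """Text label: a tag applied to a character range [start, end)."""
    tag: str
    start: int
    end: int

@dataclass
class AudioSegment:
    """Audio label: a tagged time range, in seconds, with its transcript."""
    tag: str
    start_s: float
    end_s: float
    transcript: str

    def duration(self) -> float:
        return self.end_s - self.start_s

sentence = "Book a flight to Paris"
start = sentence.index("Paris")
span = TextSpan(tag="CITY", start=start, end=start + len("Paris"))
box = BoundingBox(tag="pedestrian", x=120, y=40, width=50, height=80)
segment = AudioSegment(tag="speech", start_s=0.5, end_s=2.0, transcript="turn on the lights")

print(sentence[span.start:span.end])  # the labeled text
print(box.area())                     # labeled region size in pixels
print(segment.duration())             # labeled audio length in seconds
```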

Benefits of data labeling

Benefits that come with data labeling include the following:

- Accurate predictions. As long as data scientists input properly labeled data, machine learning models are able to use that data as a ground truth to make accurate predictions when presented with new data after training.

- Data usability. Labeling makes data more usable: well-chosen labels can reduce the number of variables a model has to handle, which makes the model easier to optimize.

Challenges of data labeling

Data labeling can be expensive and time-consuming if done manually. Even if an organization takes an automated approach, the process still needs to be set up.

Data labeling is also prone to human error. For example, data might be mislabeled due to coding or manual entry errors. This might lead to inaccurate data processing, inaccurate modeling or ML bias.

Best practices for data labeling

Best practices to follow in data labeling include the following:

- Collect diverse data. Data sets to be labeled should be as diverse as possible to prevent bias.

- Ensure data is representative. Collected data should be as specific as the model needs it to be to make accurate predictions.

- Provide feedback. Regular feedback helps ensure the quality of the data labels.

- Create a label consensus. This should measure the agreement rate among labelers, whether human or machine.

- Audit labels. This helps verify label accuracy.
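The consensus and audit practices above can be combined in a simple check: take the majority label for each item and flag items where labelers disagree too much. This sketch assumes three labelers per item and an arbitrary agreement cutoff; the item IDs, labels and `MIN_AGREEMENT` value are hypothetical.

```python
from collections import Counter

MIN_AGREEMENT = 0.6  # assumed cutoff below which an item is sent for audit

# Hypothetical tags from three labelers (human or machine) per item.
annotations = {
    "img_001": ["cat", "cat", "cat"],
    "img_002": ["dog", "cat", "dog"],
    "img_003": ["bird", "dog", "cat"],
}

def consensus(labels):
    """Return the most common label and the share of labelers who chose it."""
    label, votes = Counter(labels).most_common(1)[0]
    return label, votes / len(labels)

accepted = {}
flagged = []
for item_id, labels in annotations.items():
    label, agreement = consensus(labels)
    if agreement >= MIN_AGREEMENT:
        accepted[item_id] = label
    else:
        flagged.append(item_id)  # no reliable majority; needs an audit

print(accepted)
print(flagged)
```

Raw agreement rate is the simplest measure; chance-corrected statistics such as Cohen's kappa are often preferred when some labels are much more common than others.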

Methods of data labeling

An enterprise can use several methods to structure and label its data, ranging from in-house staff to crowdsourcing and data labeling services. These options include the following:

- Crowdsourcing. A third-party platform gives an enterprise access to many workers at once.

- Outsourcing. An enterprise can hire temporary freelance workers to process and label data.

- Managed teams. An enterprise can enlist a managed team to process data. Managed teams are trained, evaluated and managed by a third-party organization.

- In-house staff. An enterprise can use its existing employees to process data.

- Synthetic labeling. New project data is generated using already existing data sets. This process increases data quality and time efficiency but requires more computing power.

- Programmatic labeling. Scripts are used to automate the data labeling process.
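As an illustration of programmatic labeling, the script below tags short texts with keyword rules and abstains when no rule applies. The keyword sets and the label names are invented for this sketch; production rules would be written and validated against the actual data.

```python
import re

# Hypothetical keyword rules for a sentiment labeling task.
POSITIVE_WORDS = {"great", "love", "excellent"}
NEGATIVE_WORDS = {"terrible", "hate", "broken"}

def label_text(text):
    """Return 'positive', 'negative', or None when the rules cannot decide."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    positive_hits = len(words & POSITIVE_WORDS)
    negative_hits = len(words & NEGATIVE_WORDS)
    if positive_hits > negative_hits:
        return "positive"
    if negative_hits > positive_hits:
        return "negative"
    return None  # abstain; leave the sample for another labeling method

texts = [
    "I love this phone!",
    "The screen arrived broken.",
    "It is a phone.",
]

for text in texts:
    print(text, "->", label_text(text))
```

Letting the script abstain keeps uncertain samples out of the training set until a person or another method can label them.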

There is no single optimal method for labeling data. Enterprises should use the method or combination of methods that best suits their needs. Some criteria to consider when choosing a data labeling method are as follows:

- The size of the enterprise.

- The size of the data set that requires labeling.

- The skill level of employees on staff.

- The financial constraints of the enterprise.

- The purpose of the ML model being supplemented with labeled data.

A good data labeling team should ideally have domain knowledge of the industry an enterprise serves. Data labelers who have outside context guiding them are more accurate. They should also be flexible and nimble, as data labeling and ML are iterative processes, always changing and evolving as more information is taken in.

Importance of data labeling

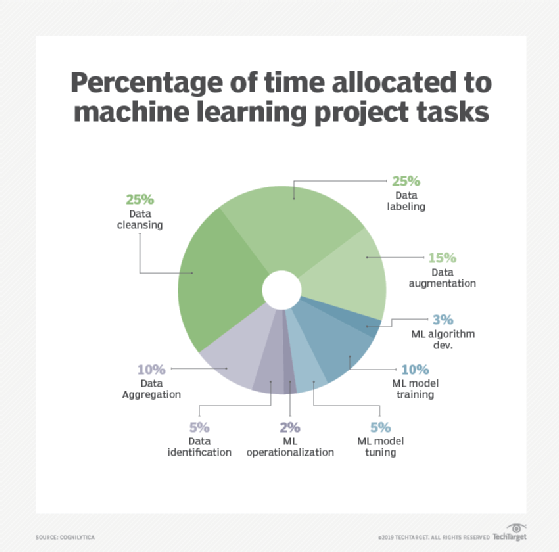

Much of what enterprises spend on AI projects typically goes toward preparing, cleaning and labeling data. Manual data labeling is the most time-consuming and expensive method, but it might be warranted for important applications.

Critics of AI speculate that automation will put low-skill jobs, such as call center work or truck and Uber driving, at risk because rote tasks are becoming easier for machines to perform. However, some experts believe that data labeling might present a new low-skill job opportunity to replace the jobs lost to automation, because there is an ever-growing volume of data that must be labeled before machines can process it for advanced ML and AI tasks.

If data is not properly labeled, the ML model cannot perform at its best, and its accuracy suffers.
